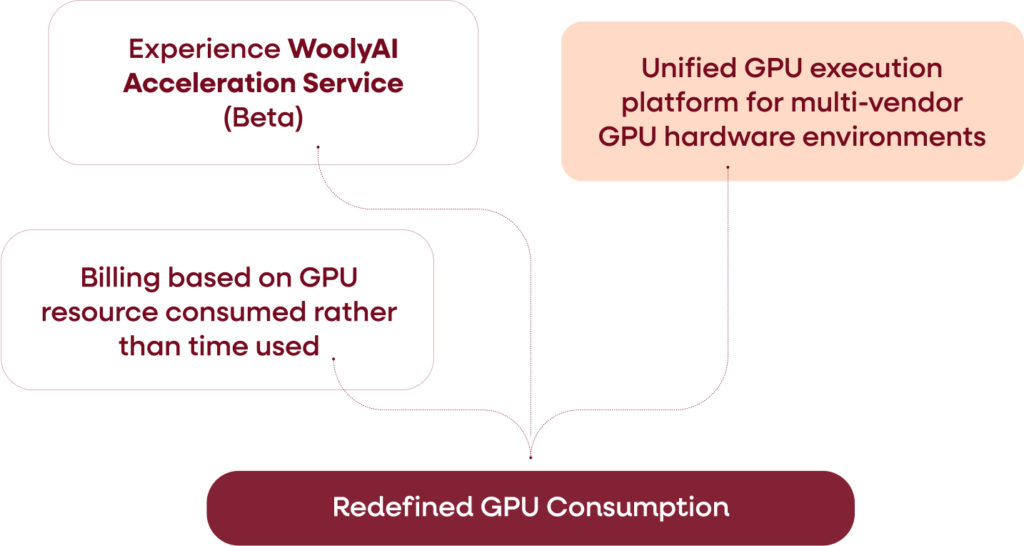

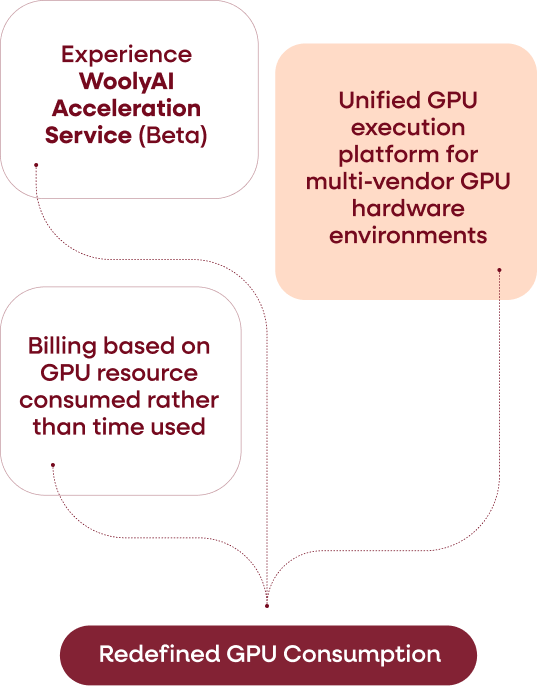

WoolyAI Acceleration Service

A virtual GPU cloud providing scalable GPU memory and processing power

A cloud service built on top of our CUDA Abstraction Layer

Your billing is based on actual GPU memory usage and GPU core utilization

You run your PyTorch projects on your own CPU infrastructure

CUDA instructions are abstracted, transformed, regenerated, and executed on the WoolyAI GPU cloud service
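The page does not publish a pricing formula. As a hypothetical illustration of billing on actual usage rather than on a reserved GPU, the sketch below sums a memory charge and a compute charge over metered intervals. The rates, the `UsageSample` record, and the per-interval sampling are all assumptions made for illustration; they are not WoolyAI's metering scheme or prices.

```python
# Hypothetical usage-based billing sketch. Rates and record layout are
# assumptions for illustration only, not WoolyAI's actual pricing.
from __future__ import annotations

from dataclasses import dataclass

RATE_PER_GB_SECOND = 0.0001    # hypothetical charge per GB of GPU memory held for 1 s
RATE_PER_CORE_SECOND = 0.0005  # hypothetical charge per second at 100% GPU core utilization


@dataclass
class UsageSample:
    """One metered interval (hypothetical record format)."""
    seconds: float           # length of the interval
    memory_gb: float         # GPU memory actually allocated during the interval
    core_utilization: float  # fraction of GPU cores busy, 0.0 to 1.0


def usage_cost(samples: list[UsageSample]) -> float:
    """Sum the memory charge and the compute charge over all intervals."""
    total = 0.0
    for s in samples:
        total += s.memory_gb * s.seconds * RATE_PER_GB_SECOND
        total += s.core_utilization * s.seconds * RATE_PER_CORE_SECOND
    return total


if __name__ == "__main__":
    # Two 10-second intervals holding 4 GB: one at 50% core utilization, one idle.
    samples = [UsageSample(10, 4.0, 0.5), UsageSample(10, 4.0, 0.0)]
    print(f"{usage_cost(samples):.4f}")
```

In the usage example the total is 0.0105 hypothetical units: 0.004 of memory charge for each interval plus 0.0025 of compute charge for the busy one. The idle interval still accrues a memory charge because the 4 GB stays allocated, but no compute charge.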

```console
$ docker exec wooly-container wooly credits
994239242
$ docker exec -it wooly-container python3 pytorch-project.py
torch.cuda.get_device_name(0): WoolyAI
model.safetensors: 100%|████████████████████| 3.09G/3.09G [00:14<00:00, 220MB/s]
generation_config.json: 100%|██████████████| 3.90k/3.90k [00:00<00:00, 37.1MB/s]
preprocessor_config.json: 100%|████████████████| 340/340 [00:00<00:00, 3.86MB/s]
tokenizer_config.json: 100%|█████████████████| 283k/283k [00:00<00:00, 4.93MB/s]
added_tokens.json: 100%|████████████████████| 34.6k/34.6k [00:00<00:00, 161MB/s]
Device set to use cuda:0
```
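The contents of `pytorch-project.py` in the demo are not shown. The sketch below is a hypothetical, minimal example of the standard PyTorch device-selection pattern such a script might use; it relies only on ordinary `torch.cuda` calls and contains nothing WoolyAI-specific, which is the point of running unmodified code. According to the demo output, `torch.cuda.get_device_name(0)` reports `WoolyAI` inside the Wooly container. On other machines it reports the local GPU, or the sketch falls back to the CPU. The `pick_device` helper is an illustrative name, not part of any WoolyAI API.

```python
# Hypothetical sketch of device selection in a PyTorch script; not the
# actual pytorch-project.py from the demo.
from __future__ import annotations

try:
    import torch
except ImportError:  # lets the sketch run where PyTorch is not installed
    torch = None


def pick_device() -> tuple[str, str]:
    """Return (device string, device name), preferring the first CUDA device."""
    if torch is not None and torch.cuda.is_available():
        return "cuda:0", torch.cuda.get_device_name(0)
    return "cpu", "cpu"


if __name__ == "__main__":
    device, name = pick_device()
    print(f"device: {device}, name: {name}")
    if torch is not None:
        x = torch.randn(256, 256, device=device)
        y = x @ x  # matrix multiply runs on whichever device was selected
        print(tuple(y.shape))
```

Because the script asks for `cuda:0` through the normal PyTorch API, the same file runs on a local GPU, on a CPU-only machine, or, per the demo, against the WoolyAI service without code changes.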

Coming Soon

Abstracted CUDA execution for cross-platform compatibility

Configures multi-vendor GPU infrastructure and presents it as a virtual GPU cloud service.

Runs unmodified PyTorch CUDA workloads regardless of the underlying GPU hardware.

Run ML workloads with your existing container orchestration setup.

Run concurrent workloads on the cluster and manage their SLAs.