Media

Brief Introduction to WoolyAI Software

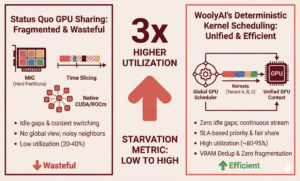

Co-locating multiple jobs on a GPU with deterministic performance

WoolyAI Deterministic Kernel Scheduling on GPU for 3x more utilization

Running an ML job on a CPU-only machine with kernel execution on a remote shared GPU pool

Running the same ML container on both NVIDIA and AMD with no changes

VRAM Memory DeDup to run more LoRA adapter models on each GPU

Blog

A New Approach to GPU Sharing: Deterministic, SLA-Based GPU Kernel Scheduling for Higher Utilization

Modern GPU-sharing techniques like MIG and time-slicing improve utilization, but they fundamentally operate by partitioning or alternating access to the device, leaving large amounts of GPU compute...

December 7, 2025

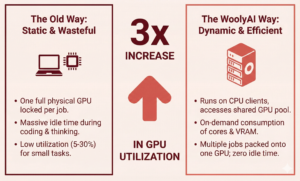

Triple Your GPU Utilization in ML Development with WoolyAI

Stop letting idle GPUs drain your budget during the experimentation phase. Machine learning development is expensive, but it doesn’t have to be wasteful. In the typical ML lifecycle, the “experimentation, dev, and test” stages are notorious for low GPU utilization.

December 4, 2025